Massively parallel LLM inference is underrated.

If you use AI today, you’re likely:

- Asking ChatGPT or Claude to do something for you

- Switching to another task for just long enough for it to finish

- Navigating back to judge its output and then either:

a. Accepting it, implementing the solution, and moving on

b. Rejecting it and trying to reason with the AI again, and again, and again…

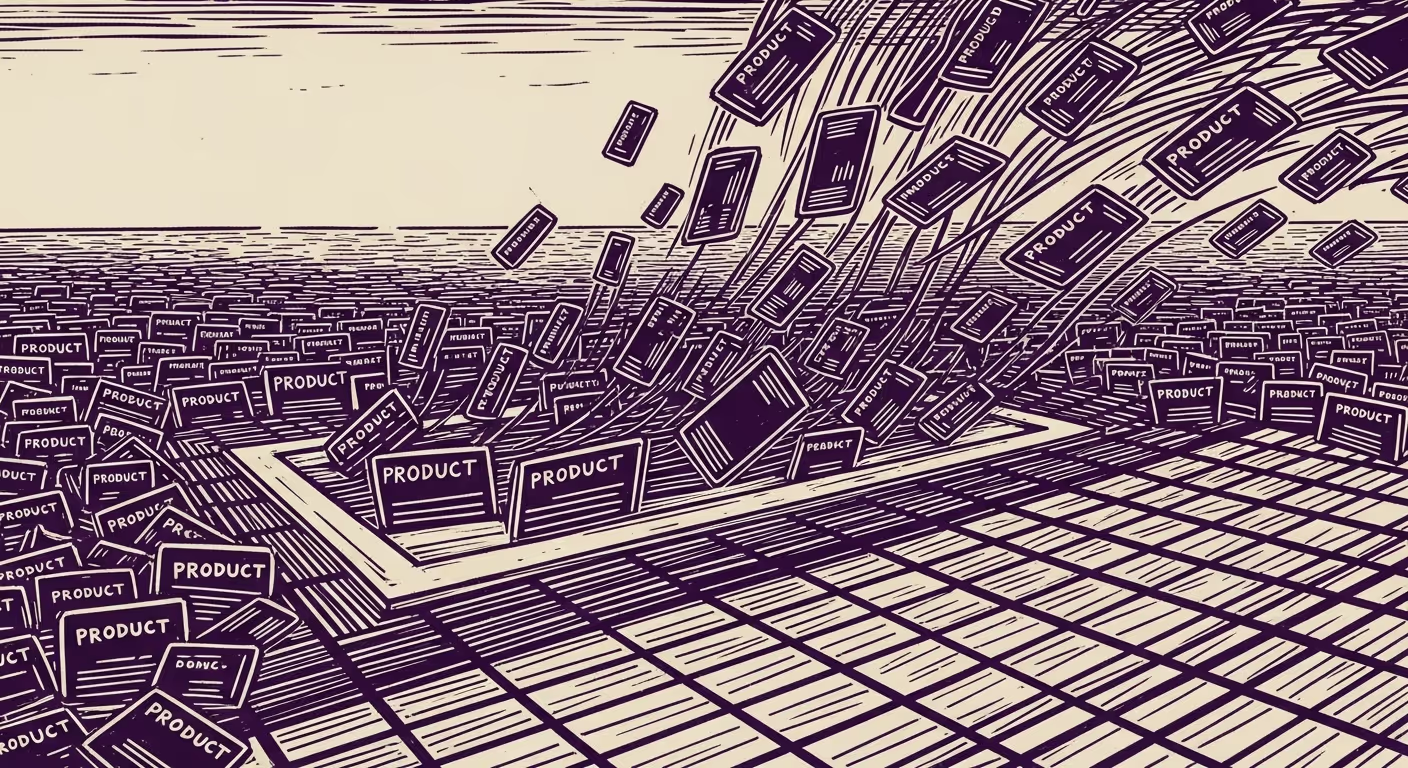

This is a waste of time! The future of work looks like StarCraft or Age of Empires.

Imagine you have an allocation of 10,000 requests, rather than a single prompt, at 100,000 tokens per second. (For context, the average GPT interaction uses 50-150 tokens per turn.) You’re directing this computational firepower to solve problems and create solutions, acting as the commander with agentic systems as your units. If the point of battle is to win, you need asymmetric advantages. This isn’t just about sheer firepower or brute force, though that’s part of it. The real power lies in directing and managing these forces strategically to maximize their effectiveness.

As in any real working environment, you don’t spread capacity evenly across 200 different tasks. You provision agents to match the nature of each problem. Some tasks stand alone, while others form clusters: interconnected webs where complexity and complication (two distinct beasts) hide out of sight.

Yes, each LLM has some probability of finding a solution. But you don’t want just one correct solution per problem. You want enough attempts to get several, then mix and match them into one extremely high-quality solution. Think of it like Monte Carlo sampling: you’re hedging against randomness as you explore the solution space.
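To make the Monte Carlo intuition concrete, here is a minimal Python sketch under loudly stated assumptions: `noisy_solver` is a hypothetical stand-in for a single LLM call that is right 60% of the time, and plain majority voting replaces the richer "mix and match" step described above. It estimates how often the vote lands on the correct answer as the number of parallel samples grows.

```python
import random
from collections import Counter


def noisy_solver(rng: random.Random, p_correct: float = 0.6) -> str:
    """Hypothetical stand-in for one LLM call: returns the right answer with
    probability p_correct, otherwise one of several wrong answers."""
    if rng.random() < p_correct:
        return "correct"
    return rng.choice(["wrong_a", "wrong_b", "wrong_c"])


def majority_vote(answers: list[str]) -> str:
    """Combine independent samples by picking the most common answer
    (a simplification of mixing and matching partial solutions)."""
    return Counter(answers).most_common(1)[0][0]


def estimate_accuracy(n_samples: int, trials: int = 2000, seed: int = 0) -> float:
    """Monte Carlo estimate of how often a vote over n_samples parallel calls is correct."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(trials):
        answers = [noisy_solver(rng) for _ in range(n_samples)]
        wins += majority_vote(answers) == "correct"
    return wins / trials


if __name__ == "__main__":
    for n in (1, 5, 15):
        print(f"{n:>2} samples -> {estimate_accuracy(n):.3f} accuracy")
```

With these made-up numbers, one sample is right about 60% of the time, while a vote over 15 samples is right nearly always. Real gains depend on how independent the samples are: correlated mistakes don't average out.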

Data labeling is a fantastic base case example

As a familiar example, we built a demo app that categorizes images of clothing using parallel LLM calls. As a search company, we benefit greatly from being able to structure arbitrary datasets across different sources: it improves our ability to index and manipulate information into awesome AI experiences for our customers’ customers.
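The fan-out pattern behind a labeler like this can be sketched in a few lines of Python with `asyncio`. This is not the demo's actual code: `label_image` is a hypothetical stub standing in for a vision-model call, and `CATEGORIES` and the concurrency cap of 50 are illustrative assumptions. The semaphore bounds the number of in-flight requests so you stay within a provider's rate limits while still labeling many images at once.

```python
import asyncio

# Illustrative label set; a real labeler would use whatever taxonomy you need.
CATEGORIES = ["tops", "bottoms", "outerwear", "shoes", "accessories"]


async def label_image(path: str) -> str:
    """Hypothetical stand-in for one LLM vision call. A real version would send
    the image to a model and parse its reply; here we simulate latency and pick
    a category deterministically from the filename."""
    await asyncio.sleep(0.01)
    return CATEGORIES[sum(map(ord, path)) % len(CATEGORIES)]


async def label_all(paths: list[str], max_concurrency: int = 50) -> dict[str, str]:
    """Fan out one labeling call per image, capping concurrent requests."""
    sem = asyncio.Semaphore(max_concurrency)

    async def worker(path: str) -> tuple[str, str]:
        async with sem:  # wait here if max_concurrency calls are already running
            return path, await label_image(path)

    results = await asyncio.gather(*(worker(p) for p in paths))
    return dict(results)


if __name__ == "__main__":
    images = [f"img_{i:04d}.jpg" for i in range(200)]
    labels = asyncio.run(label_all(images))
    print(len(labels), "images labeled")
```

Because the calls run concurrently, total wall-clock time is governed by the concurrency cap and per-call latency rather than by the number of images times per-call latency.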

But here’s what’s actually cool: you could photograph all your belongings and have AI instantly sort out which items are worth more than $50. With humans, you’d need dozens of people working in parallel to finish in a reasonable time.

Google spent years tricking hundreds of millions of people into labeling data through CAPTCHAs. Now you can leverage the years of human effort that went into this and accomplish similar tasks in a few hours at a fraction of the cost. As you might expect, this will get faster and cheaper as LLMs improve.

Clothing Image Labeler

Upload images of clothing and automatically categorize them. Powered by Trieve.


Parallel compute should be used all the time

We should be using parallel compute for everything we do with AI. We should be supervisors, not spectators.

Right now, as a software engineer, I open my IDE, prompt an agent to edit code, then watch it work. Strong vibes of the out-of-shape humans in WALL-E: disengaged and passive.

I don’t want this. I want to be in flow state, hyper-engaged and forward-leaning. I want to feel and produce like Ender commanding an entire fleet.

It should be trivial to provision multiple LLMs with high variance to tackle tasks in parallel. If AI can generate product photos, I want to pick 3 styles and deploy 6 agents, 2 per style, all generating image sets simultaneously. If something’s going wrong, I can zoom in and intervene while they work. The feedback I give one agent can be immediately applied to the others, allowing them to adjust their outputs in real time.
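A minimal sketch of that provisioning and feedback loop, assuming a hypothetical `Agent` handle (no real agent framework is implied): `provision` creates 2 agents for each of 3 styles, and `broadcast` pushes one piece of feedback to every agent, or only to the agents of one style, so it shows up in each agent's next prompt.

```python
from dataclasses import dataclass, field
from itertools import product
from typing import Optional


@dataclass
class Agent:
    """Hypothetical handle for one image-generating agent."""
    agent_id: str
    style: str
    feedback: list[str] = field(default_factory=list)

    def prompt(self, product_name: str) -> str:
        # The prompt this agent would send on its next turn: the base task,
        # its assigned style, and every piece of feedback received so far.
        notes = "; ".join(self.feedback) or "none"
        return f"Product photo of {product_name} in {self.style} style. Feedback: {notes}"


def provision(styles: list[str], per_style: int) -> list[Agent]:
    """Create per_style agents for each style (3 styles x 2 = 6 agents)."""
    return [Agent(f"{s}-{i}", s) for s, i in product(styles, range(per_style))]


def broadcast(agents: list[Agent], note: str, style: Optional[str] = None) -> None:
    """Apply feedback to every agent, or only to agents working in one style."""
    for agent in agents:
        if style is None or agent.style == style:
            agent.feedback.append(note)


if __name__ == "__main__":
    fleet = provision(["minimalist", "lifestyle", "studio"], per_style=2)
    broadcast(fleet, "keep the logo visible")               # all 6 agents
    broadcast(fleet, "warmer lighting", style="lifestyle")  # only the 2 lifestyle agents
    for agent in fleet:
        print(agent.agent_id, "->", agent.prompt("canvas sneaker"))
```

Storing feedback on each agent rather than in a single global prompt keeps style-specific corrections from leaking into the other styles.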

This is similar to a strategy some clients use on Fiverr: hiring multiple freelancers for the same project, judging the quality of their work, and picking the best candidate.

New tools are needed

You can sort of replicate this with git worktrees for programming, but it’s clunky. We need new software designed for managing parallelized general intelligence.
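For reference, the worktree approach looks roughly like this: one branch and one working directory per agent. The sketch below only builds the commands (`git worktree add -b <branch> <path> <commit-ish>` is standard git); the branch naming scheme and the `../<task>-agent-<i>` paths are assumptions for illustration. Setting `run=True` executes them with `subprocess.run`, which only works inside a git repository where `main` exists.

```python
import subprocess


def worktree_commands(task: str, n_agents: int, base: str = "main") -> list[list[str]]:
    """Build one `git worktree add` command per agent.

    Each agent gets its own branch (hypothetical naming: <task>-agent-<i>)
    checked out in a sibling directory, so agents never share a working tree.
    """
    commands = []
    for i in range(n_agents):
        branch = f"{task}-agent-{i}"
        path = f"../{branch}"
        commands.append(["git", "worktree", "add", "-b", branch, path, base])
    return commands


def provision_worktrees(task: str, n_agents: int, base: str = "main",
                        run: bool = False) -> list[list[str]]:
    """Print the commands (dry run by default) or execute them when run=True."""
    commands = worktree_commands(task, n_agents, base)
    for cmd in commands:
        print(" ".join(cmd))
        if run:
            subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
    return commands


if __name__ == "__main__":
    provision_worktrees("fix-login-bug", n_agents=3)  # dry run: prints only
```

Afterwards you still diff each branch by hand, merge the winner, and clean up with `git worktree remove <path>`, which is where the clunkiness shows.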

What we have now feels like a horseless carriage: we’re mimicking old patterns instead of embracing new possibilities. The future UX for AI isn’t watching a single agent work. It’s commanding hundreds simultaneously.

Maybe these tools will be vertical-specific. Maybe they’ll be general-purpose. Either way, I’m excited to see what we build and to get it into your hands.